Zhangkai Ni¹, Lin Ma², Huanqiang Zeng¹, Jing Chen¹, Canhui Cai¹, and Kai-Kuang Ma³

¹School of Information Science and Engineering, Huaqiao University, Fujian, China

²Tencent AI Lab, Shenzhen

³School of Electrical and Electronic Engineering, Nanyang Technological University, Singapore

Fig. 1. Samples of the reference SCIs utilized in the SCID.

SCID: Screen Content Image Database

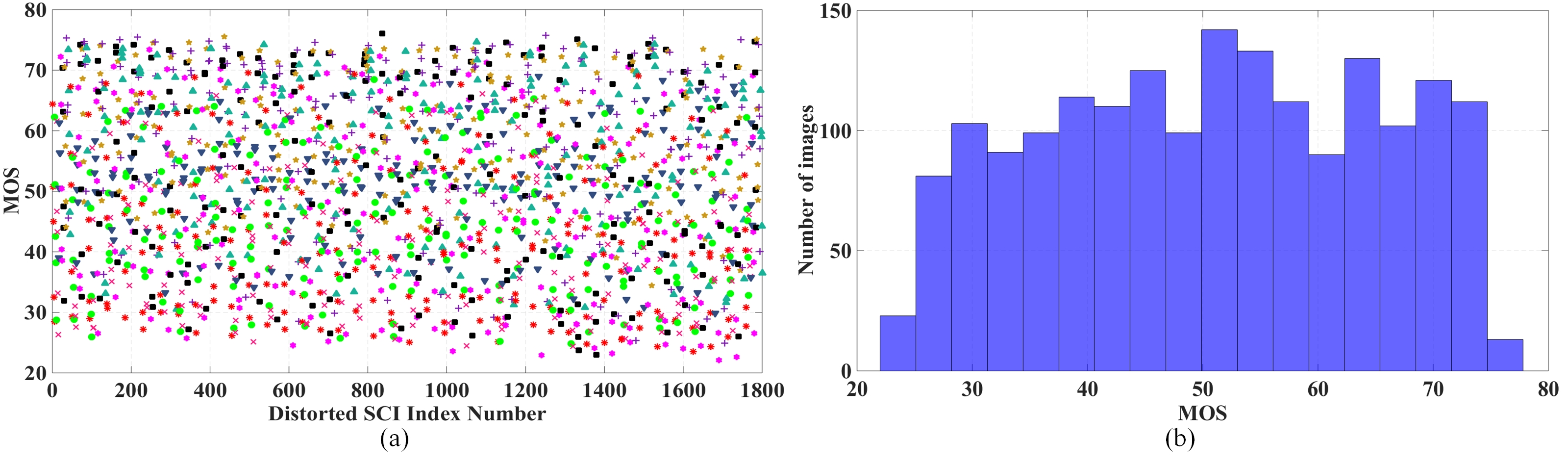

Fig. 2. Profile of the mean opinion scores (MOS) obtained for the SCID: (a) scatter plot of the MOS values of all 1,800 distorted SCIs; (b) histogram of the MOS values of all 1,800 distorted SCIs.

To evaluate the performance of our proposed ESIM model, we established a new and the largest SCI database, denoted as SCID, and made it publicly available for download. The SCID includes both the reference SCIs and their distorted versions: 1,800 distorted SCIs generated from 40 reference SCIs. For each reference SCI, 9 distortion types are investigated, and 5 degradation levels are produced for each distortion type.

To build the reference set, we conducted an extensive manual search for representative SCIs on the Internet. After careful evaluation, 40 images were selected from several hundred collected SCIs as the reference SCIs, because together they cover a wide variety of content combinations, including text, graphics, symbols, patterns, and natural images. These SCIs were captured via screen snapshots from sources such as web pages, PDF files, PowerPoint slides, comics, and digital magazines. All of them are cropped to a fixed resolution of 1280×720.
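The database size follows directly from this design: 40 reference SCIs × 9 distortion types × 5 degradation levels = 1,800 distorted SCIs. The Python sketch below enumerates that grid and maps each zero-based (reference, distortion type, level) triple to a flat index and back. The reference-major ordering and the helper names `flat_index` and `triple_from_index` are hypothetical conventions chosen for illustration; they are not the file naming or ordering of the released SCID package, so consult the supporting file before relying on any particular order.

```python
from itertools import product

# Database design stated in the text.
NUM_REFERENCES = 40
NUM_DISTORTION_TYPES = 9
NUM_LEVELS = 5
NUM_DISTORTED = NUM_REFERENCES * NUM_DISTORTION_TYPES * NUM_LEVELS


def flat_index(ref: int, dist: int, level: int) -> int:
    """Map a zero-based (reference, distortion type, level) triple to a flat index.

    Hypothetical ordering: reference-major, then distortion type, then level.
    """
    if not (0 <= ref < NUM_REFERENCES
            and 0 <= dist < NUM_DISTORTION_TYPES
            and 0 <= level < NUM_LEVELS):
        raise ValueError("index out of range")
    return (ref * NUM_DISTORTION_TYPES + dist) * NUM_LEVELS + level


def triple_from_index(idx: int) -> tuple:
    """Inverse of flat_index under the same hypothetical ordering."""
    if not 0 <= idx < NUM_DISTORTED:
        raise ValueError("index out of range")
    ref, rest = divmod(idx, NUM_DISTORTION_TYPES * NUM_LEVELS)
    dist, level = divmod(rest, NUM_LEVELS)
    return ref, dist, level


# Enumerate every distorted image in the grid (same order as flat_index).
all_triples = list(product(range(NUM_REFERENCES),
                           range(NUM_DISTORTION_TYPES),
                           range(NUM_LEVELS)))
total = len(all_triples)  # 1800
```

For example, `triple_from_index(1799)` returns `(39, 8, 4)`: the last degradation level of the last distortion type applied to the last reference image.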

ESIM: Edge-SIMilarity based IQA model for SCI

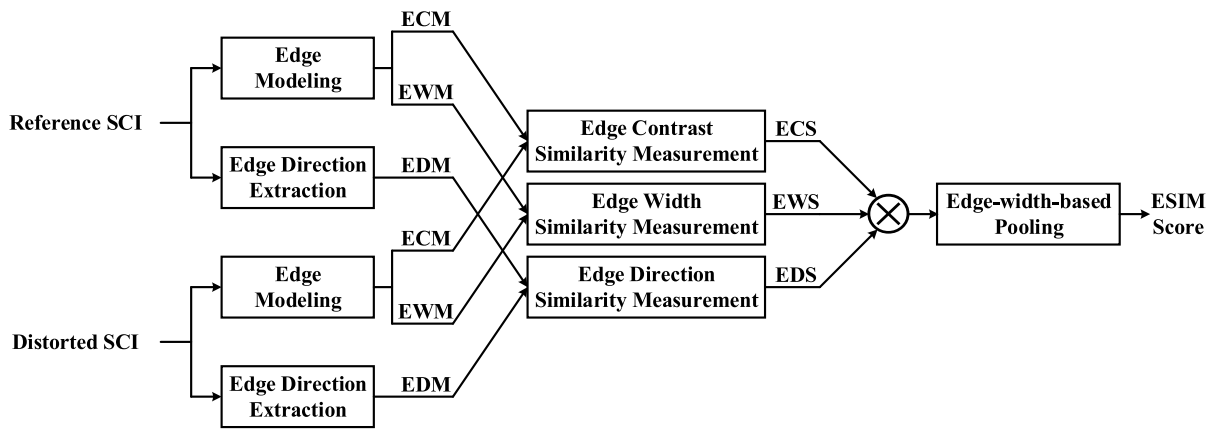

Fig. 3. The framework of our proposed ESIM for SCI.

The proposed image quality assessment (IQA) model, edge similarity (ESIM), objectively evaluates the quality of a distorted SCI with respect to its reference SCI. As shown in the flowchart in Fig. 3, the ESIM algorithm consists of three processing stages.

In the first stage, a parametric edge model is used to extract two salient edge attributes, edge contrast and edge width, which are expressed as maps, i.e., the edge contrast map (ECM) and the edge width map (EWM), respectively. In addition, the edge direction is incorporated into the model: an edge direction map (EDM) is generated directly from each SCI using our proposed edge direction computation method.

In the second stage, each edge feature map computed from the distorted SCI is compared with its counterpart from the reference SCI to yield an edge similarity measurement. For example, the two ECMs obtained from the distorted and reference SCIs are compared to produce the edge contrast similarity (ECS) map. Likewise, the edge width similarity (EWS) map and the edge direction similarity (EDS) map are generated from the corresponding pairs of EWMs and EDMs, respectively.

The three similarity maps are then combined into a single measurement map, which serves as the input to the third and final stage, where the final ESIM score is computed using our proposed edge-width-based pooling process.
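The text does not specify the similarity formula, the rule for combining the three maps, or the pooling weights. The Python sketch below fills those gaps with stated assumptions so that the data flow of the second and third stages can be followed: each pair of maps is compared with the SSIM-style ratio (2ab + C) / (a² + b² + C), a form common in IQA that equals 1 where the two maps agree; the three similarity maps are combined by a pointwise product; and pooling weights each pixel by the larger of its reference and distorted edge widths. These choices, the stabilizing constants, and the helper names `similarity_map` and `esim_sketch` are hypothetical, not ESIM's published definitions. The edge feature maps from the first stage are taken as given, flattened to equal-length lists of non-negative floats; in particular, the sketch treats the EDM as a scalar map and does not reproduce how ESIM actually encodes or compares edge directions.

```python
from typing import List, Sequence


def similarity_map(ref: Sequence[float], dist: Sequence[float], c: float) -> List[float]:
    """Pointwise SSIM-style similarity (2ab + c) / (a^2 + b^2 + c).

    Assumed form for illustration. For non-negative inputs the result lies in
    (0, 1] and equals 1 wherever ref and dist agree.
    """
    if len(ref) != len(dist):
        raise ValueError("maps must have the same length")
    return [(2 * a * b + c) / (a * a + b * b + c) for a, b in zip(ref, dist)]


def esim_sketch(ecm_r, ecm_d, ewm_r, ewm_d, edm_r, edm_d,
                c_ecs=1e-3, c_ews=1e-3, c_eds=1e-3):
    """Hypothetical stages 2 and 3: similarity maps, combination, pooling."""
    # Stage 2: compare each reference map with its distorted counterpart.
    ecs = similarity_map(ecm_r, ecm_d, c_ecs)  # edge contrast similarity
    ews = similarity_map(ewm_r, ewm_d, c_ews)  # edge width similarity
    eds = similarity_map(edm_r, edm_d, c_eds)  # edge direction similarity (scalar EDM assumed)
    # Combine into one measurement map; a pointwise product is an assumption.
    combined = [x * y * z for x, y, z in zip(ecs, ews, eds)]
    # Stage 3: edge-width-based pooling; max(reference, distorted) width is an assumed weight.
    weights = [max(a, b) for a, b in zip(ewm_r, ewm_d)]
    total_weight = sum(weights)
    if total_weight == 0:
        # No edge width anywhere: fall back to an unweighted mean.
        return sum(combined) / len(combined)
    return sum(w * s for w, s in zip(weights, combined)) / total_weight
```

With identical reference and distorted maps every similarity equals 1, so the pooled score is 1.0; lowering the distorted ECM at pixels with non-zero edge width pulls the score below 1. Weighting by edge width in this sketch simply lets wide edges contribute more to the final score than flat regions, which contribute nothing when both widths are zero.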

Download

We make the database available to the research community free of charge. You can download the image database as well as the supporting file. If you use these images in your research, please cite this website and the paper listed below.

Zhangkai Ni, Lin Ma, Huanqiang Zeng, Jing Chen, Canhui Cai, Kai-Kuang Ma, "ESIM: Edge Similarity for Screen Content Image Quality Assessment", IEEE Transactions on Image Processing, vol. 26, no. 10, pp. 4818-4831, Oct. 2017.

You can also download the SCID and the supporting file via OneDrive:

Download SCID

To request the code, please contact Dr. Zhangkai Ni (eezkni@gmail.com).

Copyright

Copyright (c) 2017 The Huaqiao University

All rights reserved. Permission is hereby granted, without written agreement and without license or royalty fees, to use, copy, and distribute this database (the images, the results, and the source files) and its documentation for non-commercial research and educational purposes only, provided that the copyright notice in its entirety appear in all copies of this database, and the original source of this database, Smart Visual Information Processing Laboratory (SmartVIPLab, http://smartviplab.org/index.html) at The Huaqiao University, is acknowledged in any publication that reports research using this database.

Contact Me

If you have any questions, please feel free to contact Dr. Zhangkai Ni (eezkni@gmail.com).
